Some time ago, in a job far far away (not that far away), I saw some JavaScript code that looked like this:

```javascript
;(function($) {
  // etc…
}(jQuery));
```

I wondered what this strange beast was and why anyone would write such code. It took me a little while to find out, but now I know, and I thought I’d share the reason with the world. That reason is called the module pattern.

Why use the module pattern?

Imagine you are writing an application with lots of JavaScript. You could just write all of it as one long, spaghetti-like stream in a single file, but there would be a few problems with this:

- You might pollute the global namespace, and there could be conflicts with other libraries or scripts your app makes use of

- You might expose code that should have stayed private, like a connection to an API, so that anyone can reach it with one simple function call from the console

- Your code will be disorganized and will get harder and harder to manage

Here is an example of a piece of code that doesn’t follow the module pattern, or any other pattern, that might produce the above problems. It’s just some vanilla JS.
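As a minimal sketch of what such code might look like (the variable `greeting` and the body of `foo` are hypothetical, chosen only for illustration):

```javascript
// Hypothetical vanilla script. When loaded with a plain <script> tag,
// both `greeting` and `foo` become properties of the global object.
var greeting = "Hello, world";

function foo() {
  console.log(greeting);
  return greeting;
}

foo();
```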

In the above example, “foo” might conflict with another variable named foo in the global namespace. Also, anyone can call “foo” by typing it directly into the browser console.

Enter the module pattern.

How it Works

The module pattern is named thusly because it is a design pattern. Design patterns, per a quick Google search, are “solutions to software design problems you find again and again in real-world application development. Patterns are about reusable designs and interactions of objects.”

Here is the same piece of code from above in a very plain module pattern format:

Basic Module Pattern

Here we are wrapping the same code from before in an anonymous function expression that executes immediately. You create one of these by declaring an anonymous function, adding the parentheses on the end to signal execution, and wrapping the whole thing in a set of parentheses to tell the interpreter this is an expression to be evaluated. This is called an Immediately-Invoked Function Expression (IIFE).

This code creates its own local scope, but then immediately executes our code, giving us the same functionality as before, but protecting it from the global scope.
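The wrapping described above can be sketched roughly like this, assuming the code being protected is a hypothetical `foo` function that reads a hypothetical `greeting` variable:

```javascript
(function () {
  // Everything declared in here is local to the anonymous function.
  var greeting = "Hello, world";

  function foo() {
    console.log(greeting);
    return greeting;
  }

  // Still runs right away, because the function is invoked immediately.
  foo();
}());

// Outside the IIFE the names were never created. Provided no other
// script defines a global `foo`, this logs "undefined".
console.log(typeof foo);
```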

There are a few shorthand ways to write the IIFE that will save you a couple of bytes and might look a little cleaner. You can remove the wrapping parentheses and put an exclamation mark or plus sign at the beginning of your function instead. The unary operator forces the interpreter to treat the function as an expression, just as the wrapping parentheses do.
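Here is a small sketch of both shorthand forms. The `log` array is a hypothetical helper that exists only to show that each function really ran:

```javascript
var log = [];

// A leading unary operator turns the function into an expression,
// so the trailing () can invoke it immediately.
!function () {
  log.push("bang");
}();

+function () {
  log.push("plus");
}();

// log now holds "bang" and "plus".
console.log(log);

// Caveat: the operator is applied to the return value as well.
var negated = !function () {
  return true;
}();

console.log(negated); // false, because ! negated the returned true
```

Because the operator changes the returned value, these forms are best kept for cases where the result of the IIFE is thrown away.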

A few variations on this simple pattern make it even more useful. In the below example, we store our module in a variable for potential use in other parts of our application. If you are familiar with Ruby, you can see how the code below resembles a Class.

Module Export Pattern

Again, we get the benefit of protecting our code from the global scope through the use of a closure (and allowing us to use any variable names we want locally), but we are able to reuse it through the DogModule variable across our application.
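A minimal sketch of this export idea follows. Only the name `DogModule` comes from the text above; the private `sound` variable and the `bark` method are hypothetical:

```javascript
var DogModule = (function () {
  // Private: only reachable through the closure below.
  var sound = "Woof";

  function bark(name) {
    return name + " says " + sound + "!";
  }

  // Public: whatever the IIFE returns is stored in DogModule.
  return {
    bark: bark
  };
}());

console.log(DogModule.bark("Rex")); // "Rex says Woof!"
console.log(DogModule.sound);       // undefined, since sound stays private
```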

Module Import Pattern

Similarly, we can import a module and extend its functionality through the module import pattern. To do this, we pass the module as an argument to an immediately-invoked function that has a matching parameter defined. Typically, the argument and the parameter are named differently to highlight that the module is going to be changed or extended somehow, but it’s not necessary. Because the parameter holds the module in the local scope, the interpreter does not have to walk up to the global scope to find it, which can give a small performance boost.
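As a sketch of the import idea, assuming a stand-in `DogModule` with a hypothetical `name` property and a hypothetical `fetch` method added to it:

```javascript
// A stand-in module so that this example runs on its own.
var DogModule = (function () {
  return { name: "Rex" };
}());

// The module is passed in as the argument `DogModule` and received as
// the parameter `Dog`, so inside the function it is a local variable.
(function (Dog) {
  Dog.fetch = function () {
    return Dog.name + " fetches the ball";
  };
}(DogModule));

console.log(DogModule.fetch()); // "Rex fetches the ball"
```

This is the same mechanism as the `(function($) { … }(jQuery));` wrapper from the start of the post: `jQuery` is the argument and `$` is the local parameter.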

Module Export Pattern w/Loose Augmentation

One downside with this pattern so far is that our entire module must be contained in one file. However, we can add the loose augmentation pattern to free us from this constraint. Each file imports the module (see the module import pattern above) if it has already been defined and “augments” it. Otherwise, if that file is the first one to be loaded, it starts from a new empty object to define the module.
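A rough sketch of loose augmentation, with two hypothetical files shown one after the other (the file names and the `bark` and `fetch` methods are made up for illustration):

```javascript
// dog-bark.js (hypothetical file)
var DogModule = (function (Dog) {
  Dog.bark = function () {
    return "Woof!";
  };
  return Dog;
}(DogModule || {})); // use the existing module, or start a new object

// dog-fetch.js (hypothetical file)
var DogModule = (function (Dog) {
  Dog.fetch = function () {
    return "Fetching!";
  };
  return Dog;
}(DogModule || {}));

// Whichever file loads first, the module ends up with both methods.
console.log(DogModule.bark());  // "Woof!"
console.log(DogModule.fetch()); // "Fetching!"
```

Note that this relies on `var`: the declaration is hoisted, so `DogModule` is simply `undefined` the first time around, and declaring it again in a second file is allowed. Declaring the module with `let` or `const` in every file would throw instead.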

TLDR?

- The module pattern makes sure your code doesn't interfere with the global scope.

- It allows you to extend the functionality of other modules.

- It's a powerful way of organizing your code.

Sources

http://www.adequatelygood.com/JavaScript-Module-Pattern-In-Depth.html

http://toddmotto.com/mastering-the-module-pattern/

https://teamtreehouse.com/library/the-module-pattern-in-javascript-2

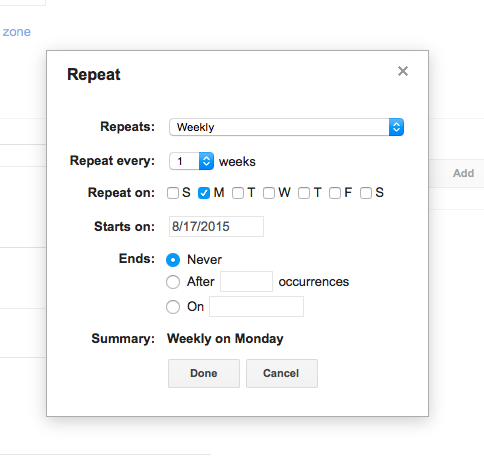

After looking at the Google Calendar interface, which seemed to implement a set of rules, we suspected that scheduling was a problem that has been dealt with before in programming land. We therefore took to the internet where we discovered

After looking at the Google Calendar interface, which seemed to implement a set of rules, we suspected that scheduling was a problem that has been dealt with before in programming land. We therefore took to the internet where we discovered